AWS Backup & Disaster Recovery Strategies

Protect your business with comprehensive backup and disaster recovery strategies using AWS Backup, cross-region replication, and automated recovery procedures

Introduction

Data loss and service disruptions can be catastrophic for any business. Whether caused by human error, cyberattacks, natural disasters, or system failures, the impact can range from temporary inconvenience to complete business failure. A robust backup and disaster recovery (DR) strategy is not just a technical requirement – it’s a business imperative.

This comprehensive guide explores AWS backup and disaster recovery strategies, from basic backup automation to multi-region disaster recovery architectures. You’ll learn how to design solutions that meet your Recovery Time Objective (RTO) and Recovery Point Objective (RPO) requirements while optimizing costs.

What You’ll Learn:

- Understanding RTO, RPO, and recovery strategies

- AWS Backup service for centralized backup management

- Cross-region replication strategies for S3, RDS, and DynamoDB

- Disaster recovery patterns: Backup & Restore, Pilot Light, Warm Standby, Hot Standby

- Automating backup policies with AWS CDK and Python

- Testing and validating recovery procedures

- Cost optimization for backup and DR solutions

Understanding RTO and RPO

Recovery Time Objective (RTO)

RTO is the maximum acceptable time that an application can be down after a disaster occurs. It answers: “How quickly must we recover?”

Examples:

- E-commerce site: RTO of 1 hour (lost sales, reputation damage)

- Internal reporting system: RTO of 24 hours (less critical)

- Banking application: RTO of minutes (regulatory requirements)

Recovery Point Objective (RPO)

RPO is the maximum acceptable amount of data loss measured in time. It answers: “How much data can we afford to lose?”

Examples:

- Financial trading system: RPO of seconds (every transaction matters)

- Blog website: RPO of 24 hours (daily backups acceptable)

- Data warehouse: RPO of 1 hour (hourly snapshots)
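To make the two definitions concrete, here is a minimal sketch that checks whether a backup schedule can satisfy a given RPO. The `RecoveryObjectives` type and both helper functions are hypothetical names introduced for illustration, and the worst-case model (failure strikes just before the next backup completes) is a simplifying assumption.

```python
from dataclasses import dataclass
from datetime import timedelta


@dataclass(frozen=True)
class RecoveryObjectives:
    """Recovery targets for one workload (hypothetical helper type)."""
    rto: timedelta  # maximum acceptable downtime
    rpo: timedelta  # maximum acceptable data loss, measured in time


def worst_case_data_loss(
    backup_interval: timedelta,
    backup_duration: timedelta = timedelta(0),
) -> timedelta:
    """Simplified model: the failure happens just before the next backup
    completes, so the last usable backup is one interval plus one backup
    duration old."""
    return backup_interval + backup_duration


def meets_rpo(
    objectives: RecoveryObjectives,
    backup_interval: timedelta,
    backup_duration: timedelta = timedelta(0),
) -> bool:
    """Return True if the schedule's worst-case data loss fits within the RPO."""
    return worst_case_data_loss(backup_interval, backup_duration) <= objectives.rpo


if __name__ == '__main__':
    blog = RecoveryObjectives(rto=timedelta(hours=24), rpo=timedelta(hours=24))
    print(meets_rpo(blog, backup_interval=timedelta(hours=24)))
```

Under this model, a daily backup only just meets a 24-hour RPO when the backup itself is instantaneous; once backup duration is counted, the schedule needs to run more often than the RPO suggests.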

RTO vs RPO Cost Trade-offs

Higher Availability = Higher Cost

RPO/RTO    Strategy           AWS Services                  Monthly Cost (estimate)
────────────────────────────────────────────────────────────────────────────
Days       Backup & Restore   S3, Glacier, AWS Backup       $50-500
Hours      Pilot Light        S3, RDS standby, Route53      $500-2000
Minutes    Warm Standby       Multi-AZ, Read Replica        $2000-10000
Seconds    Hot Standby        Active-Active, Multi-Region   $10000+

AWS Backup Service

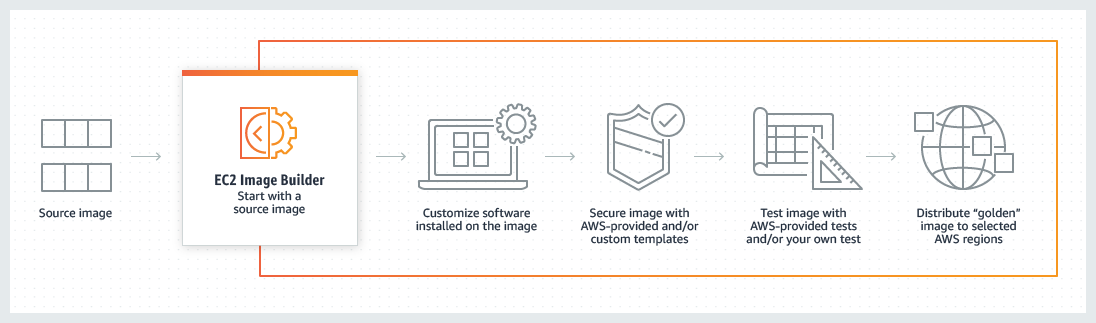

Introduction to AWS Backup

AWS Backup is a fully managed service that centralizes and automates data protection across AWS services including:

- Amazon EC2 (EBS volumes)

- Amazon RDS and Aurora

- Amazon DynamoDB

- Amazon EFS

- Amazon FSx

- AWS Storage Gateway

- Amazon S3

Key Benefits:

- Centralized management: Single console for all backups

- Automated backup scheduling: Policy-based backup plans

- Cross-region backup: Automatic replication to different regions

- Compliance reporting: Track backup compliance requirements

- Lifecycle management: Transition to cold storage automatically

- Encryption: All backups encrypted at rest and in transit
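As a rough illustration of policy-based plans and lifecycle management, the sketch below builds one rule in the shape that the boto3 `create_backup_plan` call expects inside `BackupPlan['Rules']`. The `build_backup_rule` helper is a hypothetical name; the two checks reflect AWS Backup constraints (a start window of at least 60 minutes, and at least 90 days in cold storage before deletion).

```python
from typing import Any, Dict, Optional

MIN_COLD_STORAGE_DAYS = 90     # backups moved to cold storage must stay there 90+ days
MIN_START_WINDOW_MINUTES = 60  # shorter start windows are rejected by AWS Backup


def build_backup_rule(
    rule_name: str,
    vault_name: str,
    schedule_cron: str,
    delete_after_days: int,
    cold_after_days: Optional[int] = None,
    start_window_minutes: int = 60,
    completion_window_minutes: int = 120,
) -> Dict[str, Any]:
    """Build a single backup rule dict, validating lifecycle settings first."""
    if start_window_minutes < MIN_START_WINDOW_MINUTES:
        raise ValueError('start window must be at least 60 minutes')

    lifecycle: Dict[str, int] = {'DeleteAfterDays': delete_after_days}
    if cold_after_days is not None:
        if delete_after_days < cold_after_days + MIN_COLD_STORAGE_DAYS:
            raise ValueError(
                'delete_after_days must be at least 90 days after cold_after_days'
            )
        lifecycle['MoveToColdStorageAfterDays'] = cold_after_days

    return {
        'RuleName': rule_name,
        'TargetBackupVaultName': vault_name,
        'ScheduleExpression': schedule_cron,
        'StartWindowMinutes': start_window_minutes,
        'CompletionWindowMinutes': completion_window_minutes,
        'Lifecycle': lifecycle,
    }


if __name__ == '__main__':
    rule = build_backup_rule(
        'DailyBackups', 'primary-backup-vault', 'cron(0 2 * * ? *)',
        delete_after_days=120, cold_after_days=30,
    )
    print(rule)
```

Validating locally before calling the API keeps a mistyped retention value from surfacing only at deploy time.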

Creating Backup Plans with AWS CDK

// lib/backup-stack.ts

import * as cdk from 'aws-cdk-lib';

import * as backup from 'aws-cdk-lib/aws-backup';

import * as events from 'aws-cdk-lib/aws-events';

import * as iam from 'aws-cdk-lib/aws-iam';

import * as rds from 'aws-cdk-lib/aws-rds';

import * as ec2 from 'aws-cdk-lib/aws-ec2';

import { Construct } from 'constructs';

export class BackupStack extends cdk.Stack {

constructor(scope: Construct, id: string, props?: cdk.StackProps) {

super(scope, id, props);

// Create backup vault

const backupVault = new backup.BackupVault(this, 'PrimaryBackupVault', {

backupVaultName: 'primary-backup-vault',

// Enable encryption with KMS

encryptionKey: new cdk.aws_kms.Key(this, 'BackupKey', {

description: 'KMS key for backup encryption',

enableKeyRotation: true,

removalPolicy: cdk.RemovalPolicy.RETAIN,

}),

// Prevent accidental deletion

removalPolicy: cdk.RemovalPolicy.RETAIN,

});

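// Create backup vault for cross-region copies.
// Note: a vault declared in this stack is created in the stack's own region.
// For a real DR setup, create the vault in a stack deployed to eu-west-1 and
// reference it here, for example with backup.BackupVault.fromBackupVaultArn().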

const drBackupVault = new backup.BackupVault(this, 'DRBackupVault', {

backupVaultName: 'dr-backup-vault-eu-west-1',

removalPolicy: cdk.RemovalPolicy.RETAIN,

});

// Daily backup plan with lifecycle rules

const dailyBackupPlan = new backup.BackupPlan(this, 'DailyBackupPlan', {

backupPlanName: 'daily-backup-plan',

backupPlanRules: [

new backup.BackupPlanRule({

ruleName: 'DailyBackups',

backupVault,

// Schedule: Daily at 2 AM UTC

scheduleExpression: events.Schedule.cron({

hour: '2',

minute: '0',

}),

// Start backup within 1 hour of schedule

startWindow: cdk.Duration.hours(1),

// Complete backup within 2 hours

completionWindow: cdk.Duration.hours(2),

// Lifecycle: Transition to cold storage after 30 days
moveToColdStorageAfter: cdk.Duration.days(30),
// Delete after 120 days (backups must stay in cold storage for at least 90 days)
deleteAfter: cdk.Duration.days(120),
// Copy to DR region
copyActions: [
{
destinationBackupVault: drBackupVault,
moveToColdStorageAfter: cdk.Duration.days(30),
deleteAfter: cdk.Duration.days(120),

},

],

}),

],

});

// Hourly backup plan for critical databases

const hourlyBackupPlan = new backup.BackupPlan(this, 'HourlyBackupPlan', {

backupPlanName: 'hourly-critical-backup-plan',

backupPlanRules: [

new backup.BackupPlanRule({

ruleName: 'HourlyBackups',

backupVault,

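// Schedule: every hour, on the hour (backup rules require a cron expression)
scheduleExpression: events.Schedule.cron({ minute: '0' }),
// AWS Backup requires a start window of at least 60 minutes
startWindow: cdk.Duration.hours(1),
completionWindow: cdk.Duration.hours(2),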

// Keep hourly backups for 7 days

deleteAfter: cdk.Duration.days(7),

// Copy to DR region immediately

copyActions: [

{

destinationBackupVault: drBackupVault,

deleteAfter: cdk.Duration.days(7),

},

],

}),

],

});

// Weekly backup plan with long retention

const weeklyBackupPlan = new backup.BackupPlan(this, 'WeeklyBackupPlan', {

backupPlanName: 'weekly-long-term-backup-plan',

backupPlanRules: [

new backup.BackupPlanRule({

ruleName: 'WeeklyBackups',

backupVault,

// Schedule: Every Sunday at 3 AM UTC

scheduleExpression: events.Schedule.cron({

weekDay: 'SUN',

hour: '3',

minute: '0',

}),

startWindow: cdk.Duration.hours(2),

completionWindow: cdk.Duration.hours(4),

// Transition to cold storage after 7 days

moveToColdStorageAfter: cdk.Duration.days(7),

// Keep for 1 year

deleteAfter: cdk.Duration.days(365),

copyActions: [

{

destinationBackupVault: drBackupVault,

moveToColdStorageAfter: cdk.Duration.days(7),

deleteAfter: cdk.Duration.days(365),

},

],

}),

],

});

// Backup selection: Tag-based resource selection

// Daily backups for all production resources

dailyBackupPlan.addSelection('ProductionResources', {
  backupSelectionName: 'production-daily-backup',
  // Role that AWS Backup assumes to create and restore backups
  role: new iam.Role(this, 'BackupRole', {
    assumedBy: new iam.ServicePrincipal('backup.amazonaws.com'),
    managedPolicies: [
      iam.ManagedPolicy.fromAwsManagedPolicyName(
        'service-role/AWSBackupServiceRolePolicyForBackup'
      ),
      iam.ManagedPolicy.fromAwsManagedPolicyName(
        'service-role/AWSBackupServiceRolePolicyForRestores'
      ),
    ],
  }),
  resources: [
    // Select resources by tag. Tag conditions are ORed: a resource that
    // matches either tag is included in the selection.
    backup.BackupResource.fromTag('Environment', 'production'),
    backup.BackupResource.fromTag('Backup', 'daily'),
  ],
});

// Hourly backups for critical databases

hourlyBackupPlan.addSelection('CriticalDatabases', {

resources: [

backup.BackupResource.fromTag('Criticality', 'critical'),

backup.BackupResource.fromTag('Backup', 'hourly'),

],

});

// Weekly backups for long-term retention

weeklyBackupPlan.addSelection('LongTermRetention', {

resources: [

backup.BackupResource.fromTag('Backup', 'weekly'),

],

});

// CloudWatch alarm for failed backups

const backupFailureAlarm = new cdk.aws_cloudwatch.Alarm(

this,

'BackupFailureAlarm',

{

alarmName: 'backup-job-failure',

metric: new cdk.aws_cloudwatch.Metric({

namespace: 'AWS/Backup',

metricName: 'NumberOfBackupJobsFailed',

statistic: 'Sum',

period: cdk.Duration.hours(1),

}),

threshold: 1,

evaluationPeriods: 1,

comparisonOperator:

cdk.aws_cloudwatch.ComparisonOperator.GREATER_THAN_OR_EQUAL_TO_THRESHOLD,

treatMissingData: cdk.aws_cloudwatch.TreatMissingData.NOT_BREACHING,

}

);

// SNS topic for backup notifications

const backupTopic = new cdk.aws_sns.Topic(this, 'BackupNotifications', {

displayName: 'Backup Job Notifications',

});

backupFailureAlarm.addAlarmAction(

new cdk.aws_cloudwatch_actions.SnsAction(backupTopic)

);

// Outputs

new cdk.CfnOutput(this, 'BackupVaultArn', {

value: backupVault.backupVaultArn,

description: 'Primary backup vault ARN',

});

new cdk.CfnOutput(this, 'DailyBackupPlanId', {

value: dailyBackupPlan.backupPlanId,

description: 'Daily backup plan ID',

});

}

}

Python Script for Backup Automation

# scripts/backup_automation.py
import boto3
import json
from datetime import datetime, timedelta, timezone
from typing import Dict, List, Optional


class AWSBackupManager:
    """Manage AWS Backup operations programmatically"""

    def __init__(self, region: str = 'eu-central-1'):
        self.backup_client = boto3.client('backup', region_name=region)
        self.ec2_client = boto3.client('ec2', region_name=region)
        self.rds_client = boto3.client('rds', region_name=region)
        self.region = region

    def create_on_demand_backup(
        self,
        resource_arn: str,
        backup_vault_name: str,
        iam_role_arn: str,
        backup_name: Optional[str] = None
    ) -> str:
        """
        Create an on-demand backup for a specific resource

        Args:
            resource_arn: ARN of the resource to backup
            backup_vault_name: Name of the backup vault
            iam_role_arn: IAM role ARN for backup service
            backup_name: Optional custom backup name (used as idempotency token)

        Returns:
            Backup job ID
        """
        if not backup_name:
            timestamp = datetime.now().strftime('%Y%m%d-%H%M%S')
            backup_name = f"on-demand-backup-{timestamp}"

        try:
            response = self.backup_client.start_backup_job(
                BackupVaultName=backup_vault_name,
                ResourceArn=resource_arn,
                IamRoleArn=iam_role_arn,
                IdempotencyToken=backup_name,
                StartWindowMinutes=60,
                CompleteWindowMinutes=120,
                Lifecycle={
                    'MoveToColdStorageAfterDays': 30,
                    # Must be at least 90 days after the cold storage transition
                    'DeleteAfterDays': 120
                }
            )

            backup_job_id = response['BackupJobId']
            print(f"Backup job started: {backup_job_id}")
            return backup_job_id

        except Exception as e:
            print(f"Error creating backup: {str(e)}")
            raise

    def list_backup_jobs(
        self,
        backup_vault_name: Optional[str] = None,
        state: Optional[str] = None,
        max_results: int = 100
    ) -> List[Dict]:
        """
        List backup jobs with optional filters

        Args:
            backup_vault_name: Filter by backup vault
            state: Filter by job state (CREATED, PENDING, RUNNING, COMPLETED, FAILED, ABORTED)
            max_results: Maximum number of results

        Returns:
            List of backup jobs
        """
        params = {'MaxResults': max_results}

        if backup_vault_name:
            params['ByBackupVaultName'] = backup_vault_name
        if state:
            params['ByState'] = state

        try:
            response = self.backup_client.list_backup_jobs(**params)
            return response.get('BackupJobs', [])

        except Exception as e:
            print(f"Error listing backup jobs: {str(e)}")
            raise

    def restore_from_backup(
        self,
        recovery_point_arn: str,
        iam_role_arn: str,
        metadata: Dict,
        resource_type: str
    ) -> str:
        """
        Restore a resource from a backup recovery point

        Args:
            recovery_point_arn: ARN of the recovery point
            iam_role_arn: IAM role ARN for restore service
            metadata: Restore metadata (varies by resource type)
            resource_type: Type of resource (EBS, RDS, etc.)

        Returns:
            Restore job ID
        """
        try:
            response = self.backup_client.start_restore_job(
                RecoveryPointArn=recovery_point_arn,
                IamRoleArn=iam_role_arn,
                Metadata=metadata,
                ResourceType=resource_type
            )

            restore_job_id = response['RestoreJobId']
            print(f"Restore job started: {restore_job_id}")
            return restore_job_id

        except Exception as e:
            print(f"Error starting restore: {str(e)}")
            raise

    def get_recovery_points(
        self,
        backup_vault_name: str,
        resource_arn: Optional[str] = None
    ) -> List[Dict]:
        """
        List available recovery points in a backup vault

        Args:
            backup_vault_name: Name of the backup vault
            resource_arn: Optional filter by resource ARN

        Returns:
            List of recovery points
        """
        params = {'BackupVaultName': backup_vault_name}

        if resource_arn:
            params['ByResourceArn'] = resource_arn

        try:
            response = self.backup_client.list_recovery_points_by_backup_vault(
                **params
            )
            return response.get('RecoveryPoints', [])

        except Exception as e:
            print(f"Error listing recovery points: {str(e)}")
            raise

    def tag_resources_for_backup(
        self,
        resource_ids: List[str],
        resource_type: str,
        backup_frequency: str = 'daily'
    ) -> None:
        """
        Tag resources for automated backup

        Args:
            resource_ids: List of resource IDs to tag (full ARNs for db-instance)
            resource_type: Type of resource (instance, volume, db-instance)
            backup_frequency: Backup frequency (hourly, daily, weekly)
        """
        tags = [
            {'Key': 'Backup', 'Value': backup_frequency},
            {'Key': 'ManagedBy', 'Value': 'AWSBackup'}
        ]

        try:
            if resource_type in ('instance', 'volume'):
                # EC2 instances and EBS volumes are both tagged through the EC2 API
                self.ec2_client.create_tags(
                    Resources=resource_ids,
                    Tags=tags
                )
            elif resource_type == 'db-instance':
                # RDS expects ResourceName to be the full DB instance ARN
                for db_arn in resource_ids:
                    self.rds_client.add_tags_to_resource(
                        ResourceName=db_arn,
                        Tags=tags
                    )
            else:
                raise ValueError(f"Unsupported resource type: {resource_type}")

            print(f"Tagged {len(resource_ids)} {resource_type}(s) for {backup_frequency} backup")

        except Exception as e:
            print(f"Error tagging resources: {str(e)}")
            raise

    def generate_backup_report(
        self,
        backup_vault_name: str,
        days: int = 7
    ) -> Dict:
        """
        Generate backup compliance report

        Args:
            backup_vault_name: Name of the backup vault
            days: Number of days to look back

        Returns:
            Report dictionary with statistics
        """
        cutoff_date = datetime.now(timezone.utc) - timedelta(days=days)

        # Get backup jobs for the vault (first page only, up to max_results)
        jobs = self.list_backup_jobs(backup_vault_name=backup_vault_name)

        # Filter by date. boto3 returns CreationDate as a timezone-aware
        # datetime, so it can be compared with the cutoff directly.
        recent_jobs = [
            job for job in jobs
            if job['CreationDate'] > cutoff_date
        ]

        # Calculate statistics
        total_jobs = len(recent_jobs)
        completed = len([j for j in recent_jobs if j['State'] == 'COMPLETED'])
        failed = len([j for j in recent_jobs if j['State'] == 'FAILED'])
        running = len([j for j in recent_jobs if j['State'] == 'RUNNING'])

        total_size = sum(
            job.get('BackupSizeInBytes', 0)
            for job in recent_jobs
            if job['State'] == 'COMPLETED'
        )

        report = {
            'vault_name': backup_vault_name,
            'period_days': days,
            'total_jobs': total_jobs,
            'completed_jobs': completed,
            'failed_jobs': failed,
            'running_jobs': running,
            'success_rate': f"{(completed/total_jobs*100):.2f}%" if total_jobs > 0 else "0%",
            'total_backup_size_gb': f"{total_size / (1024**3):.2f}",
            'generated_at': datetime.now(timezone.utc).isoformat()
        }

        return report


# Example usage
if __name__ == '__main__':
    # Initialize backup manager
    backup_mgr = AWSBackupManager(region='eu-central-1')

    # Create on-demand backup for RDS instance
    rds_arn = "arn:aws:rds:eu-central-1:123456789012:db:production-db"
    iam_role = "arn:aws:iam::123456789012:role/AWSBackupServiceRole"

    job_id = backup_mgr.create_on_demand_backup(
        resource_arn=rds_arn,
        backup_vault_name='primary-backup-vault',
        iam_role_arn=iam_role,
        backup_name='production-db-manual-backup'
    )

    # Generate backup report
    report = backup_mgr.generate_backup_report(
        backup_vault_name='primary-backup-vault',
        days=7
    )

    print("\n=== Backup Report ===")
    print(json.dumps(report, indent=2))

    # Tag EC2 instances for daily backup
    instance_ids = ['i-1234567890abcdef0', 'i-0987654321fedcba0']
    backup_mgr.tag_resources_for_backup(
        resource_ids=instance_ids,
        resource_type='instance',
        backup_frequency='daily'
    )

Cross-Region Replication Strategies

S3 Cross-Region Replication

// lib/s3-replication-stack.ts

import * as cdk from 'aws-cdk-lib';

import * as s3 from 'aws-cdk-lib/aws-s3';

import * as iam from 'aws-cdk-lib/aws-iam';

import { Construct } from 'constructs';

export class S3ReplicationStack extends cdk.Stack {

constructor(scope: Construct, id: string, props?: cdk.StackProps) {

super(scope, id, props);

// Source bucket in primary region (eu-central-1)

const sourceBucket = new s3.Bucket(this, 'SourceBucket', {

bucketName: 'forrict-production-data-primary',

versioned: true, // Required for replication

encryption: s3.BucketEncryption.S3_MANAGED,

lifecycleRules: [

{

// Transition old versions to Glacier after 30 days

noncurrentVersionTransitions: [

{

storageClass: s3.StorageClass.GLACIER,

transitionAfter: cdk.Duration.days(30),

},

],

// Delete old versions after 90 days

noncurrentVersionExpiration: cdk.Duration.days(90),

},

],

removalPolicy: cdk.RemovalPolicy.RETAIN,

});

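// Destination bucket for the DR region (eu-west-1).
// Note: a bucket declared in this stack is created in the stack's own region.
// For true cross-region replication, create it in a stack deployed to
// eu-west-1 and reference it here by ARN.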

const destinationBucket = new s3.Bucket(this, 'DestinationBucket', {

bucketName: 'forrict-production-data-dr',

versioned: true,

encryption: s3.BucketEncryption.S3_MANAGED,

removalPolicy: cdk.RemovalPolicy.RETAIN,

});

// IAM role for replication

const replicationRole = new iam.Role(this, 'ReplicationRole', {

assumedBy: new iam.ServicePrincipal('s3.amazonaws.com'),

path: '/service-role/',

});

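// Permissions S3 needs on the source bucket to read objects for replication
replicationRole.addToPolicy(new iam.PolicyStatement({
  actions: ['s3:GetReplicationConfiguration', 's3:ListBucket'],
  resources: [sourceBucket.bucketArn],
}));
replicationRole.addToPolicy(new iam.PolicyStatement({
  actions: [
    's3:GetObjectVersionForReplication',
    's3:GetObjectVersionAcl',
    's3:GetObjectVersionTagging',
  ],
  resources: [sourceBucket.arnForObjects('*')],
}));

// Permissions to write replicas into the destination bucket
// (grantWrite does not include the s3:Replicate* actions)
replicationRole.addToPolicy(new iam.PolicyStatement({
  actions: ['s3:ReplicateObject', 's3:ReplicateDelete', 's3:ReplicateTags'],
  resources: [destinationBucket.arnForObjects('*')],
}));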

// Add replication configuration

const cfnSourceBucket = sourceBucket.node.defaultChild as s3.CfnBucket;

cfnSourceBucket.replicationConfiguration = {

role: replicationRole.roleArn,

rules: [

{

id: 'replicate-all-objects',

status: 'Enabled',

priority: 1,

filter: {

// Replicate all objects

prefix: '',

},

destination: {

bucket: destinationBucket.bucketArn,

// Replicate storage class

storageClass: 'STANDARD',

// Enable replication time control (15 minutes SLA)

replicationTime: {

status: 'Enabled',

time: {

minutes: 15,

},

},

// Enable metrics for monitoring

metrics: {

status: 'Enabled',

eventThreshold: {

minutes: 15,

},

},

},

// Delete marker replication

deleteMarkerReplication: {

status: 'Enabled',

},

},

],

};

// CloudWatch alarm for replication lag

const replicationAlarm = new cdk.aws_cloudwatch.Alarm(

this,

'ReplicationLagAlarm',

{

alarmName: 's3-replication-lag',

metric: new cdk.aws_cloudwatch.Metric({

namespace: 'AWS/S3',

metricName: 'ReplicationLatency',

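dimensionsMap: {
SourceBucket: sourceBucket.bucketName,
DestinationBucket: destinationBucket.bucketName,
// Replication metrics are published per rule, so the rule ID is needed too
RuleId: 'replicate-all-objects',
},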

statistic: 'Maximum',

period: cdk.Duration.minutes(5),

}),

// Alert if replication takes longer than 30 minutes

threshold: 1800, // seconds

evaluationPeriods: 2,

}

);

// Outputs

new cdk.CfnOutput(this, 'SourceBucketName', {

value: sourceBucket.bucketName,

});

new cdk.CfnOutput(this, 'DestinationBucketName', {

value: destinationBucket.bucketName,

});

}

}

RDS Cross-Region Read Replica

// lib/rds-replica-stack.ts

import * as cdk from 'aws-cdk-lib';

import * as rds from 'aws-cdk-lib/aws-rds';

import * as ec2 from 'aws-cdk-lib/aws-ec2';

import * as secretsmanager from 'aws-cdk-lib/aws-secretsmanager';

import { Construct } from 'constructs';

export class RDSReplicaStack extends cdk.Stack {

constructor(scope: Construct, id: string, props?: cdk.StackProps) {

super(scope, id, props);

// VPC for database

const vpc = ec2.Vpc.fromLookup(this, 'VPC', {

vpcId: 'vpc-xxxxx', // Your VPC ID

});

// Create database credentials secret

const databaseCredentials = new secretsmanager.Secret(

this,

'DatabaseCredentials',

{

secretName: 'production-db-credentials',

generateSecretString: {

// 'admin' is a reserved word for the RDS PostgreSQL master username
secretStringTemplate: JSON.stringify({ username: 'dbadmin' }),

generateStringKey: 'password',

excludePunctuation: true,

includeSpace: false,

passwordLength: 32,

},

}

);

// Primary RDS instance in eu-central-1

const primaryDatabase = new rds.DatabaseInstance(this, 'PrimaryDatabase', {

engine: rds.DatabaseInstanceEngine.postgres({

version: rds.PostgresEngineVersion.VER_15_4,

}),

instanceType: ec2.InstanceType.of(

ec2.InstanceClass.R6G,

ec2.InstanceSize.XLARGE

),

credentials: rds.Credentials.fromSecret(databaseCredentials),

vpc,

vpcSubnets: {

subnetType: ec2.SubnetType.PRIVATE_ISOLATED,

},

multiAz: true, // Multi-AZ for high availability

allocatedStorage: 100,

maxAllocatedStorage: 500, // Enable storage autoscaling

storageType: rds.StorageType.GP3,

storageEncrypted: true,

databaseName: 'production',

backupRetention: cdk.Duration.days(7),

preferredBackupWindow: '03:00-04:00', // 3-4 AM UTC

preferredMaintenanceWindow: 'Sun:04:00-Sun:05:00',

deletionProtection: true,

cloudwatchLogsExports: ['postgresql'],

parameterGroup: new rds.ParameterGroup(this, 'ParameterGroup', {

engine: rds.DatabaseInstanceEngine.postgres({

version: rds.PostgresEngineVersion.VER_15_4,

}),

parameters: {

// Enforce SSL and log connection activity and statement durations

'rds.force_ssl': '1',

'log_connections': '1',

'log_disconnections': '1',

'log_duration': '1',

},

}),

removalPolicy: cdk.RemovalPolicy.SNAPSHOT,

});

// Create read replica in DR region (eu-west-1)

const readReplica = new rds.DatabaseInstanceReadReplica(

this,

'ReadReplica',

{

sourceDatabaseInstance: primaryDatabase,

instanceType: ec2.InstanceType.of(

ec2.InstanceClass.R6G,

ec2.InstanceSize.XLARGE

),

vpc,

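// A replica declared in this stack is created in the stack's own region,
// so an eu-west-1 availability zone cannot be set here. For a cross-region
// replica, declare it in a separate stack deployed to eu-west-1.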

storageEncrypted: true,

// Read replica can have different backup retention

backupRetention: cdk.Duration.days(7),

deletionProtection: true,

removalPolicy: cdk.RemovalPolicy.SNAPSHOT,

}

);

// CloudWatch alarms

const cpuAlarm = new cdk.aws_cloudwatch.Alarm(this, 'CPUAlarm', {

alarmName: 'rds-high-cpu',

metric: primaryDatabase.metricCPUUtilization(),

threshold: 80,

evaluationPeriods: 2,

});

const replicationLagAlarm = new cdk.aws_cloudwatch.Alarm(

this,

'ReplicationLagAlarm',

{

alarmName: 'rds-replication-lag',

metric: readReplica.metric('ReplicaLag', {

statistic: 'Average',

period: cdk.Duration.minutes(1),

}),

// Alert if replication lag exceeds 60 seconds

threshold: 60,

evaluationPeriods: 3,

}

);

// Outputs

new cdk.CfnOutput(this, 'PrimaryDatabaseEndpoint', {

value: primaryDatabase.dbInstanceEndpointAddress,

description: 'Primary database endpoint',

});

new cdk.CfnOutput(this, 'ReadReplicaEndpoint', {

value: readReplica.dbInstanceEndpointAddress,

description: 'Read replica endpoint',

});

new cdk.CfnOutput(this, 'DatabaseSecretArn', {

value: databaseCredentials.secretArn,

description: 'Database credentials secret ARN',

});

}

}

Disaster Recovery Patterns

Pattern 1: Backup and Restore (RPO: Hours, RTO: Hours)

Use Case: Non-critical applications where several hours of downtime is acceptable

Architecture:

- Regular backups to S3/Glacier

- No resources running in DR region

- Lowest cost option

Cost: $50-500/month

Recovery Process:

- Detect outage in primary region

- Retrieve backups from S3

- Launch infrastructure in DR region using IaC

- Restore data from backups

- Update DNS to point to DR region
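The recovery steps above can be sketched as a timed runbook, so that each drill reports how long every step took and whether the total stayed within the RTO. This is a minimal sketch: `run_runbook` is a hypothetical helper, and the step callables stand in for whatever automation actually performs each step.

```python
import time
from typing import Callable, Dict, List, Tuple

Step = Tuple[str, Callable[[], None]]


def run_runbook(
    steps: List[Step],
    rto_seconds: float,
    clock: Callable[[], float] = time.monotonic,
) -> Dict[str, object]:
    """Run recovery steps in order and record how long each one takes.

    An exception in any step propagates, which stops the runbook at the
    failing step instead of continuing with a half-recovered environment.
    """
    started = clock()
    timings: List[Tuple[str, float]] = []

    for name, action in steps:
        step_started = clock()
        action()
        timings.append((name, clock() - step_started))

    total = clock() - started
    return {
        'timings': timings,
        'total_seconds': total,
        'within_rto': total <= rto_seconds,
    }


if __name__ == '__main__':
    # Placeholder steps; real ones would call restore and DNS automation.
    demo_steps: List[Step] = [
        ('Retrieve backups', lambda: None),
        ('Launch infrastructure', lambda: None),
        ('Restore data', lambda: None),
        ('Update DNS', lambda: None),
    ]
    print(run_runbook(demo_steps, rto_seconds=4 * 3600))
```

Passing the clock as a parameter keeps the helper easy to test without waiting on real restores.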

Pattern 2: Pilot Light (RPO: Minutes, RTO: 10-30 minutes)

Use Case: Production applications with moderate RTO/RPO requirements

Architecture:

- Core infrastructure running in DR region (databases, minimal compute)

- Data continuously replicated

- Additional resources provisioned during disaster

Cost: $500-2000/month

// lib/pilot-light-stack.ts

import * as cdk from 'aws-cdk-lib';

import * as rds from 'aws-cdk-lib/aws-rds';

import * as route53 from 'aws-cdk-lib/aws-route53';

import * as ec2 from 'aws-cdk-lib/aws-ec2';

import { Construct } from 'constructs';

export class PilotLightStack extends cdk.Stack {

constructor(scope: Construct, id: string, props?: cdk.StackProps) {

super(scope, id, props);

// Primary region: Full application stack

// DR region: Only database replica + minimal infrastructure

const vpc = ec2.Vpc.fromLookup(this, 'VPC', {

isDefault: false,

});

// Database read replica (always running in DR region)

const drDatabase = new rds.DatabaseInstanceReadReplica(

this,

'DRDatabase',

{

sourceDatabaseInstance: rds.DatabaseInstance.fromDatabaseInstanceAttributes(

this,

'SourceDB',

{

instanceIdentifier: 'primary-database',

instanceEndpointAddress: 'primary-db.xxxxx.eu-central-1.rds.amazonaws.com',

port: 5432,

securityGroups: [],

}

),

instanceType: ec2.InstanceType.of(

ec2.InstanceClass.T4G,

ec2.InstanceSize.SMALL // Smaller instance in DR region

),

vpc,

}

);

// Auto Scaling Group (launch configuration only, no instances)

// Scale out during disaster

const launchTemplate = new ec2.LaunchTemplate(this, 'DRLaunchTemplate', {

instanceType: ec2.InstanceType.of(

ec2.InstanceClass.T4G,

ec2.InstanceSize.MEDIUM

),

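// T4G instances are ARM-based (Graviton), so request the ARM64 image
machineImage: ec2.MachineImage.latestAmazonLinux2023({
  cpuType: ec2.AmazonLinuxCpuType.ARM_64,
}),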

userData: ec2.UserData.forLinux(),

});

const asg = new cdk.aws_autoscaling.AutoScalingGroup(this, 'DRASG', {

vpc,

launchTemplate,

minCapacity: 0, // No instances normally

maxCapacity: 10,

desiredCapacity: 0, // Scale to 5 during disaster

});

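// Route53 health check and failover routing
const hostedZone = route53.HostedZone.fromLookup(this, 'HostedZone', {
  domainName: 'forrict.nl',
});

// Health check for the primary endpoint (IP, port, and path are placeholders)
const primaryHealthCheck = new route53.CfnHealthCheck(this, 'PrimaryHealthCheck', {
  healthCheckConfig: {
    type: 'HTTPS',
    ipAddress: '1.2.3.4',
    port: 443,
    resourcePath: '/',
    requestInterval: 30,
    failureThreshold: 3,
  },
});

// Primary region record (with health check). Failover routing is set on the
// L1 CfnRecordSet, which exposes the failover and setIdentifier properties.
const primaryRecord = new route53.CfnRecordSet(this, 'PrimaryRecord', {
  hostedZoneId: hostedZone.hostedZoneId,
  name: `app.${hostedZone.zoneName}`,
  type: 'A',
  setIdentifier: 'primary',
  failover: 'PRIMARY',
  healthCheckId: primaryHealthCheck.attrHealthCheckId,
  ttl: '60',
  resourceRecords: ['1.2.3.4'],
});

// DR region record, served when the primary health check fails.
// The Auto Scaling group above has no load balancer of its own, so this
// aliases a DR load balancer that must be created separately. The DNS name
// and hosted zone ID below are placeholders. Alias records take no TTL.
const drRecord = new route53.CfnRecordSet(this, 'DRRecord', {
  hostedZoneId: hostedZone.hostedZoneId,
  name: `app.${hostedZone.zoneName}`,
  type: 'A',
  setIdentifier: 'dr',
  failover: 'SECONDARY',
  aliasTarget: {
    dnsName: 'dr-alb.example.eu-west-1.elb.amazonaws.com',
    hostedZoneId: 'Z123456',
    evaluateTargetHealth: true,
  },
});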

// Lambda function to promote read replica to standalone

const promoteReplicaFunction = new cdk.aws_lambda.Function(

this,

'PromoteReplica',

{

runtime: cdk.aws_lambda.Runtime.PYTHON_3_11,

handler: 'index.handler',

code: cdk.aws_lambda.Code.fromInline(`

import boto3
import os

rds = boto3.client('rds')

def handler(event, context):
    db_instance_id = os.environ['DB_INSTANCE_ID']

    # Promote read replica to standalone instance
    response = rds.promote_read_replica(
        DBInstanceIdentifier=db_instance_id
    )

    print(f"Promoted read replica: {db_instance_id}")

    return {
        'statusCode': 200,
        'body': f"Promoted {db_instance_id} to standalone instance"
    }

`),

environment: {

DB_INSTANCE_ID: drDatabase.instanceIdentifier,

},

timeout: cdk.Duration.minutes(5),

}

);

// Grant permission to promote the replica. Promotion is an RDS API call,
// so no database connection permission (grantConnect) is needed.

promoteReplicaFunction.addToRolePolicy(

new cdk.aws_iam.PolicyStatement({

actions: ['rds:PromoteReadReplica'],

resources: [drDatabase.instanceArn],

})

);

}

}

Pattern 3: Warm Standby (RPO: Seconds, RTO: Minutes)

Use Case: Business-critical applications requiring minimal downtime

Architecture:

- Scaled-down version of full environment running in DR region

- Data synchronized in real-time

- Can handle some production traffic

Cost: $2000-10000/month

Pattern 4: Hot Standby / Active-Active (RPO: None, RTO: Seconds)

Use Case: Mission-critical applications with zero tolerance for downtime

Architecture:

- Full production environment in multiple regions

- Active-active configuration

- Real-time data replication

- Global load balancing

Cost: $10000+/month
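Choosing between the four patterns comes down to picking the cheapest one that still meets the workload's RTO and RPO. The sketch below shows that selection logic. The thresholds in `PATTERNS` are illustrative assumptions loosely derived from the pattern headings above, not AWS guarantees, and `choose_dr_pattern` is a hypothetical helper name.

```python
from datetime import timedelta
from typing import List, NamedTuple


class DRPattern(NamedTuple):
    name: str
    achievable_rto: timedelta
    achievable_rpo: timedelta


# Ordered from cheapest to most expensive. Thresholds are assumptions made
# for this example; replace them with figures measured in your own DR tests.
PATTERNS: List[DRPattern] = [
    DRPattern('Backup & Restore', timedelta(hours=1), timedelta(hours=1)),
    DRPattern('Pilot Light', timedelta(minutes=10), timedelta(minutes=1)),
    DRPattern('Warm Standby', timedelta(minutes=1), timedelta(seconds=1)),
    DRPattern('Hot Standby', timedelta(seconds=1), timedelta(0)),
]


def choose_dr_pattern(required_rto: timedelta, required_rpo: timedelta) -> str:
    """Return the cheapest pattern whose assumed RTO and RPO meet the targets."""
    for pattern in PATTERNS:
        if (pattern.achievable_rto <= required_rto
                and pattern.achievable_rpo <= required_rpo):
            return pattern.name
    # Nothing meets the targets; fall back to the strongest pattern available.
    return PATTERNS[-1].name


if __name__ == '__main__':
    print(choose_dr_pattern(timedelta(minutes=30), timedelta(minutes=5)))
```

Because the list is ordered by cost, the first match is also the least expensive option under these assumptions.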

Testing and Validation

Automated DR Testing Script

# scripts/dr_testing.py
import boto3
import time
from typing import Dict, List
from datetime import datetime


class DRTester:
    """Automated disaster recovery testing"""

    def __init__(self, primary_region: str, dr_region: str):
        self.primary_region = primary_region
        self.dr_region = dr_region
        self.backup_client = boto3.client('backup', region_name=dr_region)
        self.rds_client = boto3.client('rds', region_name=dr_region)
        self.ec2_client = boto3.client('ec2', region_name=dr_region)

    def test_backup_restore(
        self,
        recovery_point_arn: str,
        test_instance_name: str
    ) -> Dict:
        """
        Test restoring from backup

        Returns test results and metrics
        """
        print("Starting DR restore test...")
        start_time = datetime.now()

        try:
            # Start restore job
            response = self.backup_client.start_restore_job(
                RecoveryPointArn=recovery_point_arn,
                Metadata={
                    'DBInstanceIdentifier': test_instance_name,
                    'DBInstanceClass': 'db.t3.small',
                    'Engine': 'postgres',
                },
                IamRoleArn='arn:aws:iam::123456789012:role/AWSBackupServiceRole',
                ResourceType='RDS'
            )

            restore_job_id = response['RestoreJobId']
            print(f"Restore job started: {restore_job_id}")

            # Monitor restore progress
            while True:
                job_status = self.backup_client.describe_restore_job(
                    RestoreJobId=restore_job_id
                )

                status = job_status['Status']
                print(f"Restore status: {status}")

                if status == 'COMPLETED':
                    break
                elif status in ['FAILED', 'ABORTED']:
                    raise Exception(f"Restore failed with status: {status}")

                time.sleep(30)

            # Calculate RTO
            end_time = datetime.now()
            rto_seconds = (end_time - start_time).total_seconds()

            # Verify restored instance
            restored_resource = job_status['CreatedResourceArn']
            verification_result = self._verify_database(test_instance_name)

            # Clean up test instance
            self._cleanup_test_resources(test_instance_name)

            return {
                'success': True,
                'rto_seconds': rto_seconds,
                'rto_minutes': round(rto_seconds / 60, 2),
                'restored_resource': restored_resource,
                'verification': verification_result,
                'timestamp': datetime.now().isoformat()
            }

        except Exception as e:
            return {
                'success': False,
                'error': str(e),
                'timestamp': datetime.now().isoformat()
            }

    def _verify_database(self, db_instance_id: str) -> Dict:
        """Verify restored database is accessible"""
        try:
            response = self.rds_client.describe_db_instances(
                DBInstanceIdentifier=db_instance_id
            )

            db = response['DBInstances'][0]

            return {
                'status': db['DBInstanceStatus'],
                'endpoint': db.get('Endpoint', {}).get('Address'),
                'engine': db['Engine'],
                'storage_gb': db['AllocatedStorage'],
                'accessible': db['DBInstanceStatus'] == 'available'
            }

        except Exception as e:
            return {
                'accessible': False,
                'error': str(e)
            }

    def _cleanup_test_resources(self, db_instance_id: str) -> None:
        """Clean up test resources"""
        try:
            self.rds_client.delete_db_instance(
                DBInstanceIdentifier=db_instance_id,
                SkipFinalSnapshot=True,
                DeleteAutomatedBackups=True
            )
            print(f"Cleaned up test instance: {db_instance_id}")

        except Exception as e:
            print(f"Error cleaning up: {str(e)}")

    def test_failover(self, hosted_zone_id: str, record_name: str) -> Dict:
        """Test DNS failover"""
        route53 = boto3.client('route53')

        try:
            # Get current DNS records
            response = route53.list_resource_record_sets(
                HostedZoneId=hosted_zone_id,
                StartRecordName=record_name,
                MaxItems='1'
            )

            original_records = response['ResourceRecordSets']

            # Simulate failover by updating health check
            # In production, health check would fail automatically

            return {
                'success': True,
                'original_records': original_records,
                'failover_tested': True
            }

        except Exception as e:
            return {
                'success': False,
                'error': str(e)
            }


# Example usage
if __name__ == '__main__':
    tester = DRTester(
        primary_region='eu-central-1',
        dr_region='eu-west-1'
    )

    # Test backup restore
    result = tester.test_backup_restore(
        recovery_point_arn='arn:aws:backup:eu-west-1:123456789012:recovery-point:xxxxx',
        test_instance_name='dr-test-instance'
    )

    print("\n=== DR Test Results ===")
    print(f"Success: {result['success']}")
    print(f"RTO: {result.get('rto_minutes', 'N/A')} minutes")
    print(f"Verification: {result.get('verification', {})}")

Best Practices

1. Backup Strategy

- Implement 3-2-1 rule: 3 copies, 2 different media, 1 offsite

- Automate backups with AWS Backup

- Test restores regularly (monthly minimum)

- Tag resources for automated backup

- Implement lifecycle policies to control costs
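The 3-2-1 rule is easy to check mechanically once each copy of the data is described. Below is a small sketch, assuming a hypothetical `BackupCopy` record where `media` names the storage type and `offsite` marks copies held outside the primary region.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class BackupCopy:
    """One copy of a dataset (hypothetical record type for this example)."""
    location: str  # e.g. an AWS region
    media: str     # e.g. 'ebs-volume', 'backup-vault', 's3'
    offsite: bool  # True if stored outside the primary region


def satisfies_3_2_1(copies: Iterable[BackupCopy]) -> bool:
    """Check for 3+ copies, on 2+ media types, with at least 1 offsite."""
    copies = list(copies)
    enough_copies = len(copies) >= 3
    enough_media = len({copy.media for copy in copies}) >= 2
    has_offsite = any(copy.offsite for copy in copies)
    return enough_copies and enough_media and has_offsite


if __name__ == '__main__':
    production_db = [
        BackupCopy('eu-central-1', 'ebs-volume', offsite=False),    # live data
        BackupCopy('eu-central-1', 'backup-vault', offsite=False),  # local backup
        BackupCopy('eu-west-1', 'backup-vault', offsite=True),      # DR copy
    ]
    print(satisfies_3_2_1(production_db))
```

The live data counts as one of the three copies, which is the usual reading of the rule.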

2. Data Protection

- Enable versioning on S3 buckets

- Use cross-region replication for critical data

- Encrypt all backups at rest and in transit

- Implement backup retention policies aligned with compliance

- Monitor backup job success rates

3. Recovery Planning

- Document RTO and RPO requirements for each workload

- Create detailed runbooks for recovery procedures

- Automate recovery where possible

- Practice failure scenarios (chaos engineering)

- Maintain up-to-date contact lists

4. Cost Optimization

- Use Glacier for long-term retention

- Implement intelligent tiering for S3

- Right-size DR infrastructure

- Use spot instances for DR testing

- Delete old backups automatically
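To see how lifecycle settings drive storage cost, the following sketch estimates the steady-state monthly bill for one backup schedule. It is deliberately simplified: every backup is assumed to be a full copy of the same size (real AWS Backup jobs are often incremental), and the per-GB prices are caller-supplied inputs, with the values in the example being hypothetical rather than actual AWS prices.

```python
def estimate_monthly_storage_cost(
    backup_size_gb: float,
    backups_per_day: float,
    cold_after_days: int,
    delete_after_days: int,
    warm_price_per_gb_month: float,
    cold_price_per_gb_month: float,
) -> float:
    """Estimate steady-state monthly storage cost for one backup schedule.

    At steady state, the number of retained backups in each tier equals
    the days spent in that tier multiplied by the backups taken per day.
    """
    if delete_after_days < cold_after_days:
        raise ValueError('delete_after_days must not be less than cold_after_days')

    warm_backups = cold_after_days * backups_per_day
    cold_backups = (delete_after_days - cold_after_days) * backups_per_day

    warm_cost = warm_backups * backup_size_gb * warm_price_per_gb_month
    cold_cost = cold_backups * backup_size_gb * cold_price_per_gb_month
    return warm_cost + cold_cost


if __name__ == '__main__':
    # Hypothetical prices, for illustration only.
    cost = estimate_monthly_storage_cost(
        backup_size_gb=100,
        backups_per_day=1,
        cold_after_days=30,
        delete_after_days=120,
        warm_price_per_gb_month=0.05,
        cold_price_per_gb_month=0.01,
    )
    print(f"Estimated monthly storage cost: ${cost:.2f}")
```

Running the estimate with different `cold_after_days` values shows quickly how much an earlier transition to cold storage saves for long retention periods.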

5. Monitoring and Alerting

- Monitor backup job completion

- Alert on replication lag

- Track RTO/RPO compliance

- Monitor cross-region bandwidth costs

- Set up recovery time tracking
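Tracking RPO compliance can be as simple as looking for gaps between consecutive recovery points that exceed the RPO. Here is a minimal sketch, assuming the creation timestamps have already been collected (for example from recovery point listings); `rpo_breaches` is a hypothetical helper name.

```python
from datetime import datetime, timedelta, timezone
from typing import List, Sequence, Tuple


def rpo_breaches(
    recovery_points: Sequence[datetime],
    rpo: timedelta,
    now: datetime,
) -> List[Tuple[datetime, datetime]]:
    """Return (start, end) pairs for every gap longer than the RPO.

    The gap between the newest recovery point and `now` is checked too,
    since a stalled backup schedule is itself an RPO breach.
    """
    if not recovery_points:
        raise ValueError('no recovery points to evaluate')

    points = sorted(recovery_points)
    boundaries = points[1:] + [now]

    return [
        (earlier, later)
        for earlier, later in zip(points, boundaries)
        if later - earlier > rpo
    ]


if __name__ == '__main__':
    day = datetime(2024, 1, 1, tzinfo=timezone.utc)
    points = [day, day + timedelta(hours=1), day + timedelta(hours=4)]
    gaps = rpo_breaches(points, rpo=timedelta(hours=1), now=day + timedelta(hours=4, minutes=30))
    for start, end in gaps:
        print(f"RPO breached between {start.isoformat()} and {end.isoformat()}")
```

A check like this could run on a schedule and feed the same notification channel as the backup failure alarms.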

Conclusion

A comprehensive backup and disaster recovery strategy is essential for business continuity. AWS provides powerful tools like AWS Backup, cross-region replication, and multi-region architectures to protect your data and applications. The key is choosing the right DR pattern based on your RTO/RPO requirements and budget constraints.

Key Takeaways:

- Define clear RTO and RPO requirements for each workload

- Automate backups using AWS Backup service

- Implement cross-region replication for critical data

- Choose appropriate DR pattern (Backup & Restore, Pilot Light, Warm Standby, Hot Standby)

- Test recovery procedures regularly

- Monitor backup compliance and replication lag

Ready to implement a robust disaster recovery strategy? Forrict can help you design and implement backup and DR solutions tailored to your business requirements and compliance needs.


Forrict Team

AWS expert and consultant at Forrict, specializing in cloud architecture and AWS best practices for Dutch businesses.