On-Premise to AWS Migration Roadmap: Complete Strategy Guide

Master the AWS migration journey with proven 6R strategies, comprehensive tooling, and real-world implementation patterns for successful cloud transformation

Introduction

Migrating from on-premise infrastructure to AWS is a transformative journey that requires careful planning, proven strategies, and the right tooling. Whether you’re moving a single application or an entire data center, understanding AWS’s migration framework and tools is essential for success.

This comprehensive guide provides a complete roadmap for on-premise to AWS migration, covering the 6R strategies, AWS migration tools, timeline planning, and hands-on implementation examples.

What You’ll Learn:

- AWS 6R migration strategies (Rehost, Replatform, Refactor, Repurchase, Retire, Retain)

- Migration phases: Assessment, Planning, Execution, Optimization

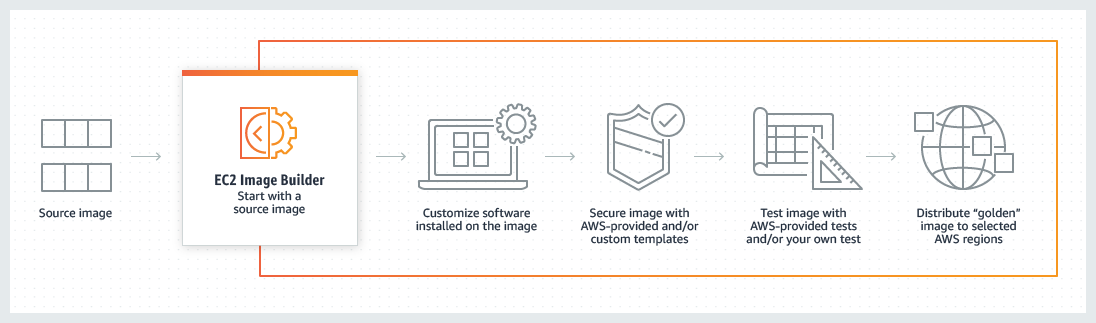

- AWS Migration Hub for centralized tracking

- Application Discovery Service for inventory management

- Database Migration Service (DMS) implementation

- AWS Application Migration Service (MGN) configuration

- Real-world migration timelines and cost optimization

- Post-migration optimization strategies

Understanding the 6R Migration Strategies

1. Rehost (Lift-and-Shift)

Description: Move applications to AWS without changes

When to Use:

- Tight migration deadlines

- Applications that work well as-is

- Minimal cloud optimization initially

- Quick data center exit required

Advantages:

- Fastest migration approach

- Lowest initial cost

- Minimal application risk

- Skills reuse from on-premise

Disadvantages:

- Misses cloud-native benefits

- May have higher long-term costs

- Limited scalability improvements

- Technical debt carried forward

Tools:

- AWS Application Migration Service (MGN)

- CloudEndure Migration (legacy; AWS positions MGN as its successor)

- VM Import/Export

Example Use Cases:

- Legacy ERP systems

- Monolithic applications

- Compliance-driven migrations

- POC/Pilot migrations

2. Replatform (Lift-Tinker-and-Shift)

Description: Make minor cloud optimizations without changing core architecture

When to Use:

- Want some cloud benefits without major changes

- Database can benefit from managed services

- Load balancing and autoscaling needed

- Moderate timeline available

Advantages:

- Some cloud optimization benefits

- Moderate migration speed

- Reduced operational overhead

- No major code changes needed

Disadvantages:

- Limited optimization compared to refactoring

- May still have architectural limitations

- Partial cloud-native adoption

Typical Changes:

- On-premise MySQL → Amazon RDS

- Self-managed Redis → Amazon ElastiCache

- Manual load balancing → Elastic Load Balancing

- Fixed capacity → Auto Scaling Groups

Example Use Cases:

- Web applications with databases

- Applications with stateful components

- Services needing high availability

3. Refactor/Re-architect

Description: Reimagine application architecture using cloud-native features

When to Use:

- Maximize cloud benefits

- Scalability requirements significant

- Microservices architecture desired

- Long-term cloud strategy

Advantages:

- Maximum cloud optimization

- Best scalability and resilience

- Reduced operational costs

- Modern architecture patterns

Disadvantages:

- Highest migration cost

- Longest timeline

- Requires significant development

- Business logic may need changes

Common Patterns:

- Monolith → Microservices (ECS/EKS)

- Server-based → Serverless (Lambda)

- Traditional queue → SQS/SNS

- Session state → DynamoDB/ElastiCache

Example Use Cases:

- SaaS applications

- High-traffic web applications

- Data-intensive workloads

- Greenfield development

4. Repurchase (Drop-and-Shop)

Description: Replace existing application with cloud-based SaaS alternative

When to Use:

- SaaS alternative available

- License costs too high

- Maintenance burden significant

- Standard functionality sufficient

Advantages:

- No infrastructure management

- Predictable costs

- Regular updates/features

- Reduced operational burden

Disadvantages:

- Data migration complexity

- Vendor lock-in

- Customization limitations

- Training requirements

Common Transitions:

- On-premise email → Microsoft 365/Google Workspace

- Self-hosted CRM → Salesforce

- Custom HR system → Workday

- File servers → Dropbox/Box

5. Retire

Description: Decommission applications no longer needed

When to Use:

- Low/no usage

- Duplicate functionality

- EOL applications

- Cost reduction focus

Advantages:

- Immediate cost savings

- Reduced complexity

- Lower security risk

- Simplified portfolio

Process:

- Identify candidates via Discovery Service

- Validate with stakeholders

- Archive data if required

- Decommission infrastructure
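The first step of this process can be sketched in code. The snippet below is a minimal illustration, not a Discovery Service feature: the `AppUsage` fields, the 5% CPU threshold, and the application names are all assumptions, and the usage figures would in practice come from your own discovery data. The output is a shortlist for stakeholder validation, not a decision.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class AppUsage:
    """Hypothetical usage summary for one application (fields are assumptions)."""
    name: str
    avg_cpu_percent: float           # average CPU over the observation window
    monthly_active_users: int
    duplicate_of: Optional[str] = None  # another app providing the same function


def find_retire_candidates(
    apps: List[AppUsage],
    cpu_threshold: float = 5.0,      # assumed cut-off for "low usage"
    user_threshold: int = 0,
) -> List[Tuple[str, List[str]]]:
    """Return (app name, reasons) pairs for apps worth reviewing for retirement."""
    candidates = []
    for app in apps:
        reasons = []
        if (app.avg_cpu_percent < cpu_threshold
                and app.monthly_active_users <= user_threshold):
            reasons.append("low usage")
        if app.duplicate_of:
            reasons.append(f"duplicates {app.duplicate_of}")
        if reasons:
            candidates.append((app.name, reasons))
    return candidates


apps = [
    AppUsage("legacy-reporting", avg_cpu_percent=1.2, monthly_active_users=0),
    AppUsage("intranet-wiki", avg_cpu_percent=22.0, monthly_active_users=140),
    AppUsage("old-ticketing", avg_cpu_percent=8.0, monthly_active_users=12,
             duplicate_of="service-desk"),
]
for name, reasons in find_retire_candidates(apps):
    print(f"{name}: {', '.join(reasons)}")
```

Keeping the reasons alongside each candidate makes the stakeholder conversation easier, since each entry explains why it was flagged.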

Cost Impact:

- As a rough rule of thumb, 10-20% of a portfolio can be retired; validate this against your own discovery data

- Significant TCO reduction

- Reduced migration scope

6. Retain (Revisit)

Description: Keep applications on-premise for now

When to Use:

- Recently upgraded systems

- Compliance constraints

- Complex dependencies

- High migration risk

Considerations:

- Plan for future migration

- Document retention reasons

- Regular reassessment

- Hybrid connectivity may be needed
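The "When to Use" criteria for the six strategies can be read as an ordered checklist. The sketch below is one possible encoding under stated assumptions: the `Workload` attributes and the order of the checks are illustrative choices, not an AWS-defined algorithm, and a real classification needs input from application owners.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    """Hypothetical workload attributes gathered during assessment."""
    name: str
    in_use: bool = True
    compliance_blocks_cloud: bool = False
    recently_upgraded: bool = False
    saas_alternative: bool = False
    needs_major_scalability: bool = False
    benefits_from_managed_services: bool = False


def choose_strategy(w: Workload) -> str:
    """Pick a 6R strategy by checking the cheapest exits first."""
    if not w.in_use:
        return "retire"          # nothing to migrate
    if w.compliance_blocks_cloud or w.recently_upgraded:
        return "retain"          # keep on-premise and reassess later
    if w.saas_alternative:
        return "repurchase"      # replace with a SaaS product
    if w.needs_major_scalability:
        return "refactor"        # re-architect for cloud-native scaling
    if w.benefits_from_managed_services:
        return "replatform"      # e.g. move the database to a managed service
    return "rehost"              # default: lift-and-shift


print(choose_strategy(Workload("hr-portal", saas_alternative=True)))
print(choose_strategy(Workload("billing-db", benefits_from_managed_services=True)))
```

Checking Retire and Retain first keeps applications that should not move at all out of the migration backlog before any effort is estimated for them.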

Migration Phases and Timeline

Phase 1: Assessment (2-4 weeks)

Objectives:

- Discover all applications and dependencies

- Classify workloads by 6R strategy

- Estimate costs and resources

- Identify risks and constraints

Activities:

```python
# scripts/assessment_automation.py
import json
from datetime import datetime, timezone
from typing import Dict, List

import boto3


class MigrationAssessment:
    """Automated migration assessment using AWS Application Discovery Service"""

    def __init__(self, region: str = 'eu-central-1'):
        self.discovery = boto3.client('discovery', region_name=region)
        # The Pricing API is served from only a few regions, us-east-1 among them
        self.pricing = boto3.client('pricing', region_name='us-east-1')
        self.ce = boto3.client('ce', region_name=region)

    def discover_on_premise_inventory(self) -> Dict:
        """
        Discover on-premise infrastructure using the Discovery Agent.
        Returns an inventory of servers, applications, and dependencies.
        """
        # List the discovery agents already deployed on-premise
        agents = self.discovery.describe_agents()
        print(f"Discovery agents registered: {len(agents.get('agentsInfo', []))}")

        inventory = {
            'servers': [],
            'applications': [],
            'dependencies': [],
            'timestamp': datetime.now(timezone.utc).isoformat()
        }

        # List discovered servers. list_configurations is the listing call;
        # describe_configurations expects explicit configuration IDs.
        # Only the first page is read here; follow nextToken for large estates.
        servers = self.discovery.list_configurations(
            configurationType='SERVER'
        )
        for server in servers.get('configurations', []):
            inventory['servers'].append({
                'id': server.get('server.configurationId'),
                'hostname': server.get('server.hostName'),
                'os': server.get('server.osName'),
                # Attribute values arrive as strings, so convert before comparing
                'cpu_count': int(float(server.get('server.cpuCount') or 0)),
                'memory_mb': int(float(server.get('server.totalRamInMB') or 0)),
                'network_interfaces': server.get('server.networkInterfaceCount'),
            })

        # Map applications (listed as APPLICATION configuration items)
        applications = self.discovery.list_configurations(
            configurationType='APPLICATION'
        )
        for app in applications.get('configurations', []):
            app_id = app.get('application.configurationId')

            # Get the servers associated with this application.
            # The filter field name is an assumption; check it against the
            # ListConfigurations filter reference before relying on it.
            dependencies = self.discovery.list_configurations(
                configurationType='SERVER',
                filters=[{
                    'name': 'applicationConfigurationId',
                    'values': [app_id],
                    'condition': 'EQUALS'
                }]
            )
            inventory['applications'].append({
                'id': app_id,
                'name': app.get('application.name'),
                'description': app.get('application.description'),
                'server_count': len(dependencies.get('configurations', []))
            })

        return inventory

    def calculate_aws_costs(
        self,
        server_specs: List[Dict]
    ) -> Dict:
        """
        Estimate AWS costs for discovered servers.
        Uses the AWS Pricing API for EC2 and a flat rate for EBS storage.
        """
        total_monthly_cost = 0
        cost_breakdown = []

        for server in server_specs:
            # Determine appropriate EC2 instance type
            instance_type = self._recommend_instance_type(
                cpu_count=server['cpu_count'],
                memory_mb=server['memory_mb']
            )

            # Get EC2 pricing
            ec2_cost = self._get_ec2_pricing(instance_type, 'eu-central-1')

            # Estimate EBS storage cost (100GB default)
            storage_cost = self._get_ebs_pricing(100, 'eu-central-1')

            server_monthly_cost = ec2_cost + storage_cost
            total_monthly_cost += server_monthly_cost

            cost_breakdown.append({
                'server': server['hostname'],
                'recommended_instance': instance_type,
                'monthly_cost': round(server_monthly_cost, 2)
            })

        return {
            'total_monthly_cost': round(total_monthly_cost, 2),
            'cost_breakdown': cost_breakdown,
            'assumptions': {
                'region': 'eu-central-1',
                'storage_per_server_gb': 100,
                'monthly_hours': 730,
                'os': 'Linux'
            }
        }

    def _recommend_instance_type(
        self,
        cpu_count: int,
        memory_mb: int
    ) -> str:
        """Recommend EC2 instance type based on specs"""
        memory_gb = memory_mb / 1024

        # Simple instance type mapping
        if cpu_count <= 2 and memory_gb <= 4:
            return 't3.medium'
        elif cpu_count <= 2 and memory_gb <= 8:
            return 't3.large'
        elif cpu_count <= 4 and memory_gb <= 16:
            return 'm6i.xlarge'
        elif cpu_count <= 8 and memory_gb <= 32:
            return 'm6i.2xlarge'
        elif cpu_count <= 16 and memory_gb <= 64:
            return 'm6i.4xlarge'
        else:
            return 'm6i.8xlarge'

    def _get_ec2_pricing(self, instance_type: str, region: str) -> float:
        """Get EC2 on-demand pricing (location is hard-coded to Frankfurt)"""
        try:
            response = self.pricing.get_products(
                ServiceCode='AmazonEC2',
                Filters=[
                    {'Type': 'TERM_MATCH', 'Field': 'instanceType', 'Value': instance_type},
                    {'Type': 'TERM_MATCH', 'Field': 'location', 'Value': 'EU (Frankfurt)'},
                    {'Type': 'TERM_MATCH', 'Field': 'operatingSystem', 'Value': 'Linux'},
                    {'Type': 'TERM_MATCH', 'Field': 'tenancy', 'Value': 'Shared'},
                    {'Type': 'TERM_MATCH', 'Field': 'preInstalledSw', 'Value': 'NA'},
                    # Exclude capacity-reservation SKUs so the first result is plain on-demand
                    {'Type': 'TERM_MATCH', 'Field': 'capacitystatus', 'Value': 'Used'},
                ]
            )
            price_list = json.loads(response['PriceList'][0])
            on_demand = price_list['terms']['OnDemand']
            price_dimensions = list(on_demand.values())[0]['priceDimensions']
            hourly_price = float(list(price_dimensions.values())[0]['pricePerUnit']['USD'])
            return hourly_price * 730  # Monthly cost
        except Exception as e:
            print(f"Pricing error for {instance_type}: {str(e)}")
            return 100  # Default estimate

    def _get_ebs_pricing(self, size_gb: int, region: str) -> float:
        """Get EBS GP3 pricing"""
        # GP3 pricing: ~$0.08/GB-month in eu-central-1
        return size_gb * 0.08

    def classify_workloads(
        self,
        inventory: Dict
    ) -> Dict:
        """
        Classify applications by 6R strategy.
        Uses name-based heuristics; real classification requires analysis.
        """
        classifications = {
            'rehost': [],
            'replatform': [],
            'refactor': [],
            'repurchase': [],
            'retire': [],
            'retain': []
        }

        for app in inventory['applications']:
            server_count = app['server_count']
            # Guard against applications without a name
            app_name = (app.get('name') or '').lower()

            if 'legacy' in app_name or 'old' in app_name:
                if server_count == 0:
                    classifications['retire'].append(app)
                else:
                    classifications['rehost'].append(app)
            elif 'database' in app_name or 'db' in app_name:
                classifications['replatform'].append(app)
            elif 'web' in app_name or 'api' in app_name:
                if server_count > 5:
                    classifications['refactor'].append(app)
                else:
                    classifications['replatform'].append(app)
            elif 'sap' in app_name or 'oracle' in app_name:
                classifications['retain'].append(app)
            else:
                classifications['rehost'].append(app)

        return classifications

    def generate_assessment_report(
        self,
        output_file: str = 'migration_assessment.json'
    ) -> Dict:
        """Generate comprehensive migration assessment report"""
        print("Discovering on-premise inventory...")
        inventory = self.discover_on_premise_inventory()

        print("Calculating AWS costs...")
        costs = self.calculate_aws_costs(inventory['servers'])

        print("Classifying workloads...")
        classifications = self.classify_workloads(inventory)

        report = {
            'assessment_date': datetime.now(timezone.utc).isoformat(),
            'inventory_summary': {
                'total_servers': len(inventory['servers']),
                'total_applications': len(inventory['applications']),
            },
            'cost_analysis': costs,
            'migration_strategy': {
                'rehost_count': len(classifications['rehost']),
                'replatform_count': len(classifications['replatform']),
                'refactor_count': len(classifications['refactor']),
                'repurchase_count': len(classifications['repurchase']),
                'retire_count': len(classifications['retire']),
                'retain_count': len(classifications['retain']),
            },
            'classifications': classifications,
            'recommendations': self._generate_recommendations(classifications, costs)
        }

        with open(output_file, 'w') as f:
            json.dump(report, f, indent=2, default=str)

        print(f"\nAssessment report saved to {output_file}")
        return report

    def _generate_recommendations(
        self,
        classifications: Dict,
        costs: Dict
    ) -> List[str]:
        """Generate migration recommendations"""
        recommendations = []

        # Retire recommendations
        if classifications['retire']:
            recommendations.append(
                f"Immediately retire {len(classifications['retire'])} unused applications "
                f"to reduce migration scope and save costs"
            )

        # Rehost quick wins
        if classifications['rehost']:
            recommendations.append(
                f"Start with rehosting {len(classifications['rehost'])} applications "
                f"using AWS MGN for quick migration wins"
            )

        # Database replatform
        if classifications['replatform']:
            recommendations.append(
                f"Replatform {len(classifications['replatform'])} database workloads "
                f"to Amazon RDS/Aurora for reduced operational overhead"
            )

        # Cost optimization
        total_cost = costs['total_monthly_cost']
        if total_cost > 10000:
            potential_savings = total_cost * 0.3  # Assumes ~30% savings with Reserved Instances
            recommendations.append(
                f"Consider Reserved Instances for {potential_savings:.2f} USD/month savings "
                f"(~30% of {total_cost:.2f} USD/month)"
            )

        return recommendations


# Example usage
if __name__ == '__main__':
    assessor = MigrationAssessment(region='eu-central-1')

    # Generate assessment
    report = assessor.generate_assessment_report()

    print("\n=== Migration Assessment Summary ===")
    print(f"Total servers discovered: {report['inventory_summary']['total_servers']}")
    print(f"Total applications: {report['inventory_summary']['total_applications']}")
    print(f"\nEstimated monthly AWS cost: ${report['cost_analysis']['total_monthly_cost']}")

    print("\n6R Strategy Distribution:")
    for strategy, count in report['migration_strategy'].items():
        print(f"  {strategy}: {count}")

    print("\nRecommendations:")
    for i, rec in enumerate(report['recommendations'], 1):
        print(f"  {i}. {rec}")
```

Phase 2: Planning (4-6 weeks)

Objectives:

- Design target AWS architecture

- Create detailed migration plan

- Set up AWS landing zone

- Establish migration tooling

AWS Migration Hub Setup:

```typescript
// lib/migration-hub-stack.ts
import * as cdk from 'aws-cdk-lib';
import * as iam from 'aws-cdk-lib/aws-iam';
import * as s3 from 'aws-cdk-lib/aws-s3';
import { Construct } from 'constructs';

export class MigrationHubStack extends cdk.Stack {
  constructor(scope: Construct, id: string, props?: cdk.StackProps) {
    super(scope, id, props);

    // S3 bucket for migration artifacts
    const migrationBucket = new s3.Bucket(this, 'MigrationArtifacts', {
      bucketName: `migration-artifacts-${this.account}`,
      versioned: true,
      encryption: s3.BucketEncryption.S3_MANAGED,
      lifecycleRules: [
        {
          id: 'DeleteOldArtifacts',
          expiration: cdk.Duration.days(90),
        },
      ],
      removalPolicy: cdk.RemovalPolicy.RETAIN,
    });

    // IAM role for Migration Hub
    const migrationHubRole = new iam.Role(this, 'MigrationHubRole', {
      assumedBy: new iam.ServicePrincipal('migrationhub.amazonaws.com'),
      description: 'Role for AWS Migration Hub',
      managedPolicies: [
        iam.ManagedPolicy.fromAwsManagedPolicyName('AWSMigrationHubFullAccess'),
        // The AWS managed policy name carries the "AWS" prefix
        iam.ManagedPolicy.fromAwsManagedPolicyName('AWSApplicationDiscoveryServiceFullAccess'),
      ],
    });

    // IAM role for Application Migration Service (MGN)
    const mgnRole = new iam.Role(this, 'MGNRole', {
      assumedBy: new iam.ServicePrincipal('mgn.amazonaws.com'),
      description: 'Role for AWS Application Migration Service',
      inlinePolicies: {
        MGNPolicy: new iam.PolicyDocument({
          statements: [
            // EC2 permissions for staging and launching migrated servers
            new iam.PolicyStatement({
              effect: iam.Effect.ALLOW,
              actions: [
                'ec2:DescribeInstances',
                'ec2:DescribeSnapshots',
                'ec2:DescribeVolumes',
                'ec2:CreateSnapshot',
                'ec2:CreateTags',
                'ec2:CreateVolume',
                'ec2:DeleteSnapshot',
                'ec2:DeleteVolume',
                'ec2:DescribeImages',
                'ec2:DescribeInstanceAttribute',
                'ec2:DescribeInstanceStatus',
                'ec2:DescribeSecurityGroups',
                'ec2:DescribeSubnets',
                'ec2:DescribeVpcs',
                'ec2:ModifyInstanceAttribute',
                'ec2:RunInstances',
                'ec2:StartInstances',
                'ec2:StopInstances',
                'ec2:TerminateInstances',
              ],
              resources: ['*'],
            }),
            // Object-level access to the migration artifacts bucket only
            new iam.PolicyStatement({
              effect: iam.Effect.ALLOW,
              actions: [
                's3:GetObject',
                's3:PutObject',
                's3:DeleteObject',
              ],
              resources: [migrationBucket.arnForObjects('*')],
            }),
          ],
        }),
      },
    });

    // Outputs
    new cdk.CfnOutput(this, 'MigrationBucketName', {
      value: migrationBucket.bucketName,
      description: 'S3 bucket for migration artifacts',
    });

    new cdk.CfnOutput(this, 'MigrationHubRoleArn', {
      value: migrationHubRole.roleArn,
      description: 'IAM role for Migration Hub',
    });

    new cdk.CfnOutput(this, 'MGNRoleArn', {
      value: mgnRole.roleArn,
      description: 'IAM role for Application Migration Service',
    });
  }
}
```

Phase 3: Execution (8-12 weeks)

Database Migration with DMS:

```typescript
// lib/dms-migration-stack.ts
import * as cdk from 'aws-cdk-lib';
import * as dms from 'aws-cdk-lib/aws-dms';
import * as ec2 from 'aws-cdk-lib/aws-ec2';
import { Construct } from 'constructs';

export class DMSMigrationStack extends cdk.Stack {
  constructor(scope: Construct, id: string, props?: cdk.StackProps) {
    super(scope, id, props);

    // Vpc.fromLookup needs an explicit account and region in the stack's env
    const vpc = ec2.Vpc.fromLookup(this, 'VPC', {
      isDefault: false,
    });

    // DMS Subnet Group
    const dmsSubnetGroup = new dms.CfnReplicationSubnetGroup(
      this,
      'DMSSubnetGroup',
      {
        replicationSubnetGroupDescription: 'Subnet group for DMS replication',
        subnetIds: vpc.privateSubnets.map(subnet => subnet.subnetId),
        replicationSubnetGroupIdentifier: 'dms-subnet-group',
      }
    );

    // DMS Replication Instance
    const replicationInstance = new dms.CfnReplicationInstance(
      this,
      'DMSReplicationInstance',
      {
        replicationInstanceClass: 'dms.c5.xlarge',
        replicationInstanceIdentifier: 'dms-replication-instance',
        allocatedStorage: 100,
        multiAz: true,
        engineVersion: '3.5.1',
        replicationSubnetGroupIdentifier: dmsSubnetGroup.replicationSubnetGroupIdentifier,
        publiclyAccessible: false,
      }
    );
    replicationInstance.addDependency(dmsSubnetGroup);

    // Source Endpoint (On-premise MySQL)
    const sourceEndpoint = new dms.CfnEndpoint(this, 'SourceEndpoint', {
      endpointType: 'source',
      engineName: 'mysql',
      endpointIdentifier: 'source-mysql-on-premise',
      serverName: 'on-premise-mysql.company.local', // On-premise server
      port: 3306,
      username: 'dms_user',
      password: 'secure_password', // Placeholder only; load from Secrets Manager
      databaseName: 'production_db',
      sslMode: 'require',
    });

    // Target Endpoint (Amazon Aurora PostgreSQL)
    const targetEndpoint = new dms.CfnEndpoint(this, 'TargetEndpoint', {
      endpointType: 'target',
      engineName: 'aurora-postgresql',
      endpointIdentifier: 'target-rds-aurora',
      serverName: 'aurora-cluster.xxxxx.eu-central-1.rds.amazonaws.com',
      port: 5432,
      username: 'admin',
      password: 'secure_password', // Placeholder only; load from Secrets Manager
      databaseName: 'migrated_db',
      sslMode: 'require',
    });

    // DMS Migration Task
    const migrationTask = new dms.CfnReplicationTask(
      this,
      'MigrationTask',
      {
        replicationTaskIdentifier: 'mysql-to-aurora-migration',
        replicationInstanceArn: replicationInstance.ref,
        sourceEndpointArn: sourceEndpoint.ref,
        targetEndpointArn: targetEndpoint.ref,
        migrationType: 'full-load-and-cdc', // Full load + change data capture
        tableMappings: JSON.stringify({
          rules: [
            {
              'rule-type': 'selection',
              'rule-id': '1',
              'rule-name': 'include-all-tables',
              'object-locator': {
                'schema-name': '%',
                'table-name': '%',
              },
              'rule-action': 'include',
            },
            {
              'rule-type': 'transformation',
              'rule-id': '2',
              'rule-name': 'convert-to-lowercase',
              'rule-target': 'table',
              'object-locator': {
                'schema-name': '%',
                'table-name': '%',
              },
              'rule-action': 'convert-lowercase',
            },
          ],
        }),
        replicationTaskSettings: JSON.stringify({
          Logging: {
            EnableLogging: true,
            LogComponents: [
              {
                Id: 'SOURCE_CAPTURE',
                Severity: 'LOGGER_SEVERITY_DEFAULT',
              },
              {
                Id: 'TARGET_LOAD',
                Severity: 'LOGGER_SEVERITY_DEFAULT',
              },
            ],
          },
          ControlTablesSettings: {
            ControlSchema: 'dms_control',
            HistoryTimeslotInMinutes: 5,
          },
          FullLoadSettings: {
            TargetTablePrepMode: 'DROP_AND_CREATE',
            MaxFullLoadSubTasks: 8,
          },
          // Stream buffer options belong to the StreamBufferSettings section
          StreamBufferSettings: {
            StreamBufferSizeInMB: 16,
            StreamBufferCount: 3,
          },
        }),
      }
    );
    migrationTask.addDependency(sourceEndpoint);
    migrationTask.addDependency(targetEndpoint);
    migrationTask.addDependency(replicationInstance);

    // CloudWatch alarm on CDC source latency (reported in seconds).
    // The task dimension may need the task's resource ID rather than its
    // identifier; confirm against the metric as it appears in CloudWatch.
    const replicationLag = new cdk.aws_cloudwatch.Alarm(
      this,
      'ReplicationLagAlarm',
      {
        alarmName: 'dms-replication-lag',
        metric: new cdk.aws_cloudwatch.Metric({
          namespace: 'AWS/DMS',
          metricName: 'CDCLatencySource',
          dimensionsMap: {
            ReplicationInstanceIdentifier: replicationInstance.replicationInstanceIdentifier!,
            ReplicationTaskIdentifier: migrationTask.replicationTaskIdentifier!,
          },
          statistic: 'Average',
          period: cdk.Duration.minutes(5),
        }),
        threshold: 300, // 5 minutes lag
        evaluationPeriods: 2,
      }
    );

    // Outputs
    new cdk.CfnOutput(this, 'ReplicationInstanceEndpoint', {
      // The instance is not publicly accessible, so expose its private addresses
      value: replicationInstance.attrReplicationInstancePrivateIpAddresses.toString(),
      description: 'DMS Replication Instance endpoint',
    });

    new cdk.CfnOutput(this, 'MigrationTaskArn', {
      value: migrationTask.ref,
      description: 'DMS Migration Task ARN',
    });
  }
}
```

Server Migration with AWS MGN:

```python
# scripts/mgn_migration.py
import time
from datetime import datetime, timezone
from typing import Dict, List

import boto3


class MGNMigration:
    """AWS Application Migration Service (MGN) automation"""

    def __init__(self, region: str = 'eu-central-1'):
        self.mgn = boto3.client('mgn', region_name=region)
        self.ec2 = boto3.client('ec2', region_name=region)
        self.region = region

    def initialize_mgn_service(self) -> Dict:
        """Initialize MGN service in AWS account"""
        try:
            response = self.mgn.initialize_service()
            print("MGN service initialized successfully")
            return response
        except self.mgn.exceptions.AccessDeniedException:
            # Access denied points to missing IAM permissions, not a prior initialization
            print("Access denied while initializing MGN; check IAM permissions")
            raise

    def create_replication_configuration_template(
        self,
        subnet_id: str,
        security_group_ids: List[str],
        staging_disk_type: str = 'GP3'
    ) -> str:
        """Create replication configuration template"""
        template = self.mgn.create_replication_configuration_template(
            associateDefaultSecurityGroup=False,
            bandwidthThrottling=0,  # No throttling
            createPublicIP=False,
            dataPlaneRouting='PRIVATE_IP',
            defaultLargeStagingDiskType=staging_disk_type,
            ebsEncryption='DEFAULT',  # Use default EBS encryption
            replicationServerInstanceType='t3.medium',
            replicationServersSecurityGroupsIDs=security_group_ids,
            stagingAreaSubnetId=subnet_id,
            stagingAreaTags={
                'Purpose': 'MGN-Staging',
                'Environment': 'Migration'
            },
            useDedicatedReplicationServer=False
        )
        template_id = template['replicationConfigurationTemplateID']
        print(f"Created replication template: {template_id}")
        return template_id

    def install_replication_agent(
        self,
        server_ip: str,
        server_username: str,
        server_password: str
    ) -> str:
        """
        Instructions to install the MGN replication agent on a source server.
        Note: This is a guide. The installation itself runs on the source
        server, so the connection arguments are not used here.
        """
        installation_commands = f"""
# AWS MGN Replication Agent Installation

## For Linux Servers:
wget -O ./aws-replication-installer-init.py https://aws-application-migration-service-{self.region}.s3.{self.region}.amazonaws.com/latest/linux/aws-replication-installer-init.py
sudo python3 aws-replication-installer-init.py --region {self.region} --no-prompt

## For Windows Servers:
# Download installer from:
# https://aws-application-migration-service-{self.region}.s3.{self.region}.amazonaws.com/latest/windows/AwsReplicationWindowsInstaller.exe
# Run installer with:
# AwsReplicationWindowsInstaller.exe --region {self.region} --no-prompt

## Verify installation:
sudo systemctl status aws-replication

## Check replication status in AWS Console:
# Navigate to AWS MGN -> Source servers
"""
        print(installation_commands)
        return installation_commands

    def monitor_source_servers(self) -> List[Dict]:
        """Monitor all source servers being replicated"""
        response = self.mgn.describe_source_servers(filters={})
        servers = []
        for server in response.get('items', []):
            replication = server.get('dataReplicationInfo', {})
            server_info = {
                'server_id': server['sourceServerID'],
                'hostname': server.get('sourceProperties', {}).get('identificationHints', {}).get('hostname'),
                'replication_status': replication.get('dataReplicationState'),
                # lagDuration is the replication lag, not a last-seen timestamp
                'lag_duration': replication.get('lagDuration'),
                'replicated_disks': len(replication.get('replicatedDisks', [])),
            }
            servers.append(server_info)
        return servers

    def _wait_for_job(self, job_id: str, label: str, poll_seconds: int = 30) -> None:
        """Poll a launch job until it completes; fail if any server failed to launch"""
        while True:
            job = self.mgn.describe_jobs(filters={'jobIDs': [job_id]})['items'][0]
            status = job['status']  # PENDING, STARTED, or COMPLETED
            print(f"{label} status: {status}")
            if status == 'COMPLETED':
                break
            time.sleep(poll_seconds)

        # Job status has no failure value; failures are reported per server
        failed = [
            s['sourceServerID']
            for s in job.get('participatingServers', [])
            if s.get('launchStatus') == 'FAILED'
        ]
        if failed:
            raise RuntimeError(f"{label} failed for servers: {failed}")
        print(f"{label} completed successfully")

    def launch_test_instance(
        self,
        source_server_id: str
    ) -> str:
        """Launch test instance for migration validation"""
        response = self.mgn.start_test(
            sourceServerIDs=[source_server_id]
        )
        job_id = response['job']['jobID']
        print(f"Test instance launch initiated: {job_id}")
        self._wait_for_job(job_id, "Test launch")
        return job_id

    def cutover_server(
        self,
        source_server_id: str
    ) -> str:
        """Launch the cutover instance for a source server"""
        response = self.mgn.start_cutover(
            sourceServerIDs=[source_server_id]
        )
        job_id = response['job']['jobID']
        print(f"Cutover initiated: {job_id}")
        self._wait_for_job(job_id, "Cutover")
        return job_id

    def finalize_cutover(
        self,
        source_server_id: str
    ) -> None:
        """Finalize cutover, which stops replication for the source server"""
        self.mgn.finalize_cutover(
            sourceServerID=source_server_id
        )
        print(f"Cutover finalized for server: {source_server_id}")
        print("Source server can now be archived")

    def generate_migration_report(
        self,
        output_file: str = 'mgn_migration_report.txt'
    ) -> None:
        """Generate migration progress report"""
        servers = self.monitor_source_servers()

        report = []
        report.append("=== AWS MGN Migration Report ===")
        report.append(f"Generated: {datetime.now(timezone.utc).isoformat()}\n")
        report.append(f"Total servers: {len(servers)}\n")

        status_counts = {}
        for server in servers:
            status = server['replication_status']
            status_counts[status] = status_counts.get(status, 0) + 1

        report.append("Replication Status Summary:")
        for status, count in status_counts.items():
            report.append(f"  {status}: {count} servers")

        report.append("\nServer Details:")
        for server in servers:
            report.append(f"\nServer: {server['hostname']}")
            report.append(f"  ID: {server['server_id']}")
            report.append(f"  Replication Status: {server['replication_status']}")
            report.append(f"  Replicated Disks: {server['replicated_disks']}")

        report_text = '\n'.join(report)
        with open(output_file, 'w') as f:
            f.write(report_text)

        print(f"\nMigration report saved to {output_file}")
        print(report_text)


# Example usage
if __name__ == '__main__':
    mgn = MGNMigration(region='eu-central-1')

    # Initialize MGN
    mgn.initialize_mgn_service()

    # Create replication template
    template_id = mgn.create_replication_configuration_template(
        subnet_id='subnet-xxxxx',
        security_group_ids=['sg-xxxxx']
    )

    # Print agent installation instructions
    mgn.install_replication_agent(
        server_ip='10.0.1.100',
        server_username='admin',
        server_password='password'
    )

    # Monitor servers
    print("\n=== Source Servers ===")
    servers = mgn.monitor_source_servers()
    for server in servers:
        print(f"{server['hostname']}: {server['replication_status']}")

    # Generate report
    mgn.generate_migration_report()
```

Phase 4: Optimization (Ongoing)

Post-Migration Cost Optimization:

- Right-size instances based on actual usage

- Implement Reserved Instances/Savings Plans

- Enable Auto Scaling where appropriate

- Implement S3 Intelligent-Tiering

- Use AWS Compute Optimizer recommendations
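Right-sizing from actual usage can be illustrated with a small rule. The sketch below is a simplified stand-in for what AWS Compute Optimizer does far more thoroughly: the size ladder, the 40% and 80% thresholds, and the choice of the 95th percentile are assumptions, and the CPU samples would come from CloudWatch in practice. Because each step on the ladder doubles vCPU count, a workload below 40% at p95 should stay below roughly 80% after moving one size down.

```python
import math
from typing import List

# Assumed size ladder; each step doubles vCPUs and memory
M6I_SIZES = ["m6i.large", "m6i.xlarge", "m6i.2xlarge", "m6i.4xlarge", "m6i.8xlarge"]


def percentile(values: List[float], pct: float) -> float:
    """Nearest-rank percentile of a non-empty list."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def recommend_size(
    current: str,
    cpu_samples: List[float],
    low: float = 40.0,   # assumed: below this at p95, try one size down
    high: float = 80.0,  # assumed: above this at p95, move one size up
) -> str:
    """Suggest staying, or moving one step down or up the size ladder."""
    p95 = percentile(cpu_samples, 95)
    idx = M6I_SIZES.index(current)
    if p95 < low and idx > 0:
        return M6I_SIZES[idx - 1]
    if p95 > high and idx < len(M6I_SIZES) - 1:
        return M6I_SIZES[idx + 1]
    return current


print(recommend_size("m6i.2xlarge", [10, 12, 15, 20, 35]))  # m6i.xlarge
print(recommend_size("m6i.2xlarge", [30, 45, 50, 55, 60]))  # m6i.2xlarge
```

Memory, network, and disk throughput should be checked in the same way before resizing; CPU alone is used here only to keep the example short.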

Performance Optimization:

- Implement CloudFront for content delivery

- Enable RDS Performance Insights

- Use ElastiCache for frequently accessed data

- Implement proper monitoring with CloudWatch

Migration Timeline

```
Week 1-4: Assessment
├── Application discovery
├── Dependency mapping
├── Cost analysis
└── Strategy definition

Week 5-10: Planning
├── AWS account setup
├── Network design (VPC, subnets, connectivity)
├── Security configuration (IAM, KMS, security groups)
├── Migration tools setup (Migration Hub, DMS, MGN)
├── Pilot application selection
└── Detailed migration plan

Week 11-22: Execution
├── Wave 1: Pilot applications (2 weeks)
│   ├── Rehost 2-3 simple applications
│   ├── Validate process
│   └── Lessons learned
├── Wave 2: Databases (3 weeks)
│   ├── DMS setup and testing
│   ├── Full load migration
│   └── CDC replication
├── Wave 3: Core applications (4 weeks)
│   ├── MGN replication
│   ├── Testing instances
│   └── Cutover execution
└── Wave 4: Remaining workloads (3 weeks)
    ├── Batch migrations
    └── Final cutover

Week 23+: Optimization
├── Cost optimization
├── Performance tuning
├── Security hardening
└── Operational excellence
```

Best Practices

1. Planning

- Start with comprehensive discovery

- Create detailed dependency maps

- Define clear success criteria

- Plan for rollback scenarios

- Communicate extensively with stakeholders

2. Execution

- Migrate in waves, not big bang

- Test thoroughly before cutover

- Use automation wherever possible

- Maintain detailed runbooks

- Have rollback procedures ready
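Migrating in waves works best when the waves follow the dependency map. A minimal sketch of that idea, using Python's standard `graphlib` module (3.9+), is shown below. The application names are hypothetical, and the rule that an application moves only after everything it depends on has moved is an assumption; some teams deliberately migrate tightly coupled applications together instead.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set


def plan_waves(dependencies: Dict[str, Set[str]]) -> List[List[str]]:
    """
    Group applications into waves so that each application is migrated
    only after everything it depends on. Raises graphlib.CycleError if
    the dependency map contains a cycle.
    """
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # everything unblocked right now
        waves.append(ready)
        sorter.done(*ready)
    return waves


# Hypothetical dependency map: application -> applications it depends on
deps = {
    "web": {"api"},
    "api": {"db", "cache"},
    "reporting": {"db"},
    "db": set(),
    "cache": set(),
}
for number, wave in enumerate(plan_waves(deps), start=1):
    print(f"Wave {number}: {', '.join(wave)}")
# Wave 1: cache, db
# Wave 2: api, reporting
# Wave 3: web
```

A cycle in the map is useful information in itself: those applications have to move in the same wave or be decoupled first.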

3. Validation

- Verify data integrity post-migration

- Perform load testing

- Validate all integrations

- Check security configurations

- Review cost against estimates
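Data integrity checks can start with something as simple as comparing a row count and an order-independent checksum per table. The sketch below assumes rows have already been fetched from source and target as tuples with values normalized to the same Python types; `table_fingerprint` is a hypothetical helper, not part of DMS (which offers its own data validation feature). For a heterogeneous migration such as MySQL to PostgreSQL, type normalization is the hard part and is not covered here.

```python
import hashlib
from typing import Iterable, Tuple

_MODULUS = 1 << 256  # keep the running sum within 256 bits


def table_fingerprint(rows: Iterable[Tuple]) -> Tuple[int, str]:
    """
    Return (row count, checksum) for a table. Row digests are summed
    modulo 2**256, so the result does not depend on row order and
    duplicate rows still change the checksum.
    """
    count = 0
    combined = 0
    for row in rows:
        digest = hashlib.sha256(repr(row).encode("utf-8")).digest()
        combined = (combined + int.from_bytes(digest, "big")) % _MODULUS
        count += 1
    return count, f"{combined:064x}"


source_rows = [(1, "alice"), (2, "bob"), (3, "carol")]
target_rows = [(3, "carol"), (1, "alice"), (2, "bob")]  # same data, different order

if table_fingerprint(source_rows) == table_fingerprint(target_rows):
    print("orders table: match")
else:
    print("orders table: MISMATCH")
```

For large tables the same comparison is usually pushed down into SQL, computed per key range, so that a mismatch can be narrowed to a small set of rows.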

4. Optimization

- Continuous cost monitoring

- Regular performance reviews

- Implement AWS best practices

- Leverage managed services

- Automate operational tasks

Conclusion

Successful on-premise to AWS migration requires a structured approach, proven strategies, and the right tools. By following the 6R framework, leveraging AWS migration services, and executing in carefully planned phases, organizations can achieve successful cloud transformations with minimal risk and maximum benefit.

Key Takeaways:

- Use 6R strategies to classify workloads appropriately

- Start with comprehensive discovery and assessment

- Leverage AWS Migration Hub for centralized tracking

- Use DMS for database migrations with minimal downtime

- Deploy MGN for server rehosting migrations

- Execute in waves with thorough testing

- Optimize continuously post-migration

Ready to start your AWS migration journey? Forrict provides end-to-end migration services, from initial assessment to post-migration optimization, ensuring successful cloud transformation for Dutch businesses.

Resources

- AWS Migration Hub

- AWS Application Migration Service

- AWS Database Migration Service

- AWS Cloud Adoption Framework

- Migration Evaluator

Forrict Team

AWS expert and consultant at Forrict, specializing in cloud architecture and AWS best practices for Dutch businesses.